In the realm of managing burgeoning data stores, the allure of database sharding often beckons as a panacea for scaling woes. Yet, before delving into the intricacies of this approach, it's paramount to grasp not only what database sharding entails but also when it merits consideration.

What Exactly is Database Sharding?

Database sharding is like spreading out your data over many computers. You do this when one computer isn't enough to handle all the work.

This fits with the idea of horizontal scaling, which is different from the old way of just making one computer stronger (vertical scaling). While making things more complicated might seem cool, sometimes it's better to keep it simple. That's why often just getting a better, stronger computer is the best choice when you can solve the problem that way.

Now, let's chat about how we organize our data. Think of it like organizing your closet: you can separate different types of clothes into different drawers, just like how in microservice setups, each part of your app might have its own "drawer" or database.

Another way to do it is by spreading out the rows of a table across multiple database "drawers." This introduces concepts like sharding keys, which we'll talk about later.

Some newer databases, like Cassandra, handle all this organization behind the scenes, so you don't have to worry about it in your code.

Exploring Alternatives Before Sharding

Before plunging into the realm of database sharding, it behooves one to explore alternative avenues:

Option 1: Status Quo

While sharding might seem like a good idea, if your system isn't struggling or facing limitations, sticking with how things are might be just fine.

Option 2: Vertical Scaling

Augmenting hardware resources by beefing up RAM, CPU cores, or storage capacity presents a viable alternative that circumvents the need for extensive architectural overhauls.

Option 3: Replication

Mermaid Code

flowchart LR Master -- replication --> Replica1; Master -- replication --> Replica2; Replica1 <-- reads --> Client1; Replica2 <-- reads --> Client2; Client --> Master; subgraph Write Load Client end subgraph Main Database Master end subgraph Replicas Replica1 Replica2 end subgraph Read Load Client1 Client2 end

If you mostly need to read your data, replication can make it more available and speed up reading. It's like making photocopies of a book so more people can read it at the same time. This can help avoid the complications of splitting up your data across multiple servers (sharding).

However, if you're writing a lot of new data or changing existing data frequently, replication can make things more complicated. This is because every change needs to be copied to all the copies of the database, which can slow things down.

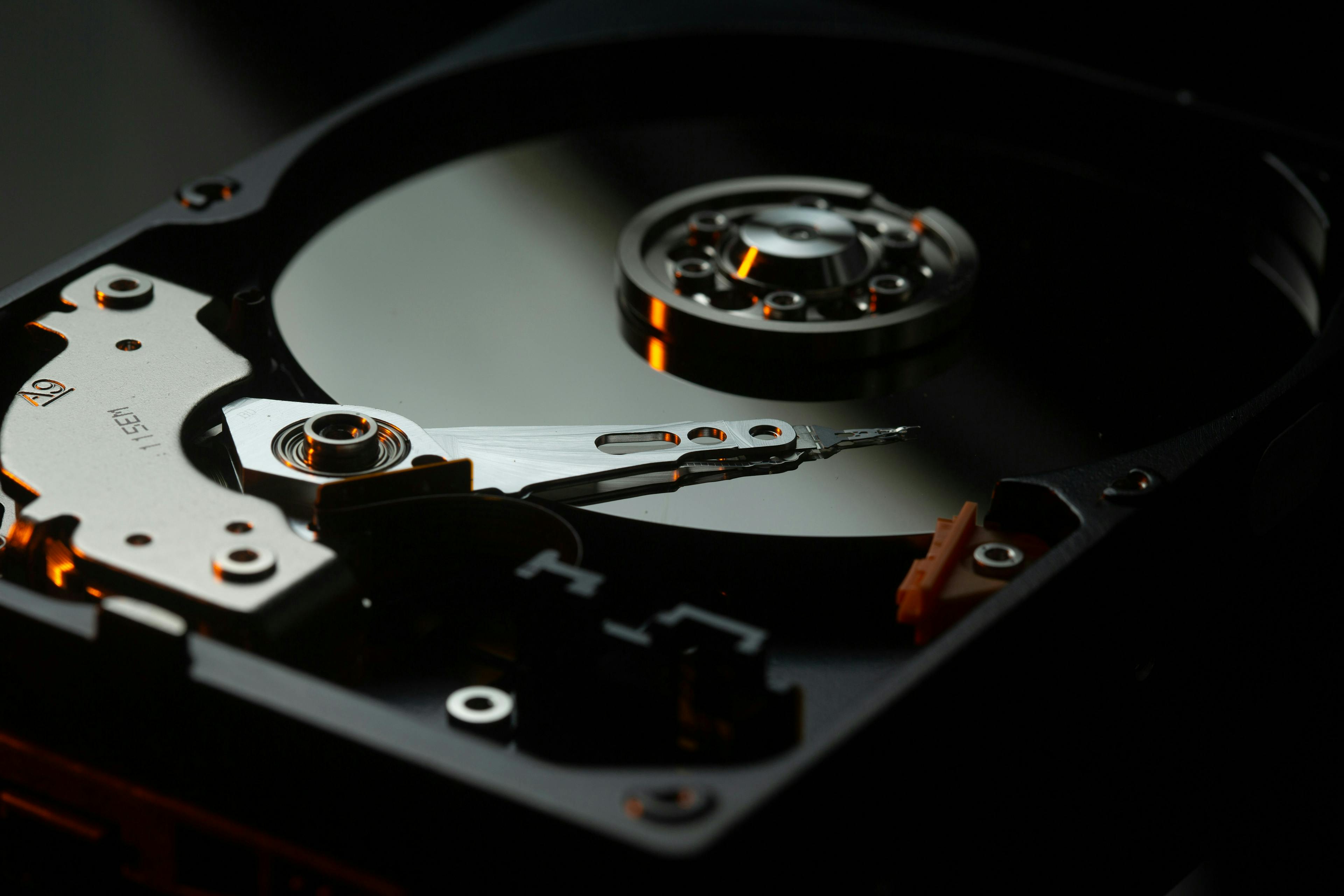

A WAL (write-ahead log) is like a backup diary stored on your computer's hard drive. It's a place where all changes to the database are written down before they're officially added to the database itself. This log is crucial because it helps recover lost data if there's a crash or if something goes wrong during a transaction.

Option 4: Specialized Databases

If your database is running slow, it might be because it's not set up well for the work it needs to do. For instance, using a traditional database for search data might not be the best idea. It could be more efficient to use specialized tools like Elasticsearch for that.

Similarly, storing large files in a regular database might not be efficient. Using something like Amazon S3 for that can be a big improvement. Sometimes, it's better to use specialized services for specific tasks rather than trying to split up your whole database.

Sharding If You Must

Sharding, when necessary, can massively boost your system's capacity, storage, and availability by breaking it into smaller, more manageable pieces. Each of these pieces can then be scaled independently with its own replicas.

However, this comes with some trade-offs. It adds complexity to operations, requires more work for your applications, and can be costly to set up and maintain.

So, how does it actually work?

First, you need to answer some key questions:

- How do we split the data among different shards? We need to make sure the data is evenly distributed to avoid overloading some shards.

- What kind of queries do we run, and how do our tables interact with each other?

- How will our data grow over time, and how will we handle redistributing it later if needed?

Now, let's break down some important terms:

- Shard Key: This is a part of the primary key that tells us how to distribute data. By using a shard key, we can quickly find and modify data by sending operations to the right database.

- Logical Shard: This is a group of data that shares the same shard key. Each logical shard is stored on one node, also called a physical shard.

- Separating Logical Shards: It's important to note that a logical shard can't span multiple nodes; it's like a single chunk of storage. If a shard gets too big for one node, it can cause problems for the whole cluster.

In simple terms, sharding divides your database into smaller pieces for better performance and scalability. Each piece has its own rules for storing and accessing data, helping your system handle more users and data without slowing down.

Sharding Approaches

Sharding databases can be done in different ways, depending on how you want to split up your data.

Key-Based Sharding

Key-based sharding uses a special function (a hash function) to decide where data should go in the database. This means that when you have a specific key for your data, the hash function tells you which part of the database to look in.

With key-based sharding, data distribution relies solely on this hash function. It doesn't consider how much data there is or how much space it takes up. This hashing method helps evenly spread out the data, even if you don't have a perfect way to divide it up.

However, there are some downsides to this approach. It can be tough to change how the data is divided up (resharding), and keeping everything consistent and available can be even trickier.

Range-Based Sharding

This approach requires a lookup table to determine where each chunk of data should be stored. It's crucial to carefully choose the ranges for this division and maintain consistency in the lookup table.

When picking a shard key for range-based sharding, it's important to choose one with high cardinality, meaning it has a large number of possible values. For example, a key with values like North, South, East, and West would have low cardinality because there are only four options. Ideally, you want a key with a wide range of values to ensure even distribution across shards.

If too much data falls within a narrow range of values, some shards may become overloaded (hotspots). You can test this by simulating different scenarios with actual data to see how evenly the data is distributed among the shards.

Relationship-Based Sharding

In this approach, related data is stored together on one physical shard. For instance, in a relational database, data for a user and all associated information might be kept on the same shard. In an application like Instagram, this could include posts and comments.

By grouping related data together, you can improve consistency within each shard and reduce the need for queries that span multiple shards. This means that operations involving related data can be faster and more reliable.

Cross Shard Transactions & Two-phase commit

Cross-shard transactions introduce complexities when you need to perform transactions that involve multiple shards. Even with careful planning, as a service or application becomes more long-lived, you're likely to encounter these types of transactions.

This essentially means you require transaction guarantees provided by an ACID-compliant database, but across shards. The challenge arises because the database can't ensure such compliance when the data you're working with spans multiple shards.

This scenario is often referred to as a global transaction, where multiple sub-transactions must coordinate and succeed. Generally, the longer a transaction is open, the more potential for contention and failure.

One common approach to manage cross-shard transactions is the two-phase commit method:

- Initiation: The leader writes a durable transaction record indicating a cross-shard transaction.

- Participation: Participants write a permanent record indicating their willingness to commit and notify the leader.

- Commitment: The leader commits the transaction by updating the durable transaction record after receiving responses from all participants. If no one responds, it can abort the transaction.

- Finalization: Participants update their state after the leader announces the commit decision. They remove the staged state if the leader aborts the transaction.

However, implementing the two-phase commit is challenging in practice. It can lead to write amplification, lock contention, and application instability due to excessive writes. Additionally, the database must filter every read to ensure it doesn't access state dependent on a pending cross-shard transaction, affecting all reads in the system, even non-transactional ones.

In Conclusion

Database sharding emerges as a compelling solution for managing vast datasets with voracious read-and-write demands. However, its implementation warrants careful deliberation, weighing the benefits against the inherent complexities. Before embarking on the sharding odyssey, introspection is key to ascertain whether the gains outweigh the associated costs or if simpler solutions suffice.